I chose to walk through the Softmax activation function this week because it was one of the most confusing activation functions I had to work to understand. So I have a soft spot (corny laughter) in my heart for it.

The motivation for Softmax

Softmax is used when you have a classification problem with more than two categories (classes). Suppose you want to know whether an image shows a cat, a dog, an alligator, or an onion. Softmax turns your model's raw outputs into values that can be read as probabilities, one per class. It is similar to sigmoid (used for binary classification), but works for any number of outputs.
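One way to see the family resemblance with sigmoid is a quick numeric check. This is a small sketch using only Python's standard `math` module; `softmax` and `sigmoid` are helper functions I'm defining for illustration, not a library API. With two classes, if the second score is fixed at 0, Softmax's first output is exactly the sigmoid of the first score, because e^z / (e^z + e^0) = 1 / (1 + e^-z).

```python
import math

def sigmoid(z):
    # Logistic sigmoid: squashes a single score into a value between 0 and 1
    return 1 / (1 + math.exp(-z))

def softmax(scores):
    # Exponentiate every score, then divide each one by the total
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

for z in [-2.0, 0.0, 1.5]:
    # Two classes, second score pinned at 0: both columns should match
    print(z, softmax([z, 0.0])[0], sigmoid(z))
```

With more than two scores, sigmoid has no direct counterpart, which is where Softmax takes over.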

Note: Softmax is not so good if you want your algorithm to tell you that it doesn't recognize any of the classes in the picture, as it will still assign a probability to each class. In other words, Softmax won't tell you "I don't see any cats, dogs, alligators, or onions."
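To illustrate that note, here is a minimal sketch with made-up scores: the list below is hypothetical, chosen as if the model found nothing that looks like any of the four classes (every score equally low). Softmax still splits the probability across the four classes, and the outputs still add up to 1.

```python
import math

def softmax(scores):
    # Exponentiate every score, then divide each one by the total
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

classes = ["cat", "dog", "alligator", "onion"]
# Hypothetical raw scores: all equally low, as if none of the classes fit the image
scores = [-8.0, -8.0, -8.0, -8.0]
probs = softmax(scores)
for name, p in zip(classes, probs):
    print(f"{name}: {p:.2f}")  # each line prints 0.25
```

There is no "none of the above" output here: the model is forced to spread its confidence over the classes it knows.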

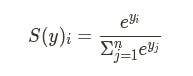

The mathematical formula for Softmax:

$$S(y_i) = \frac{e^{y_i}}{\sum_{j} e^{y_j}}$$

Steps:

- I am using S here to stand for the Softmax function, which takes y as its input (imagine that y comes from the output of our machine learning model)

- the subscript i tells us which input we are working with ($y_i$ is the i-th number from the model's output), and we repeat these steps for every one of the y inputs

- the numerator in the formula tells us to raise e to the power of each number y that goes through the Softmax function; the result is called an exponential

- An exponential is the mathematical constant e (approximately 2.718) raised to a power.

- the denominator in the formula tells us to add together the exponentials of all the numbers from your model's output

- ∑ (the Greek capital letter Sigma) stands for "sum", which means "add all the following stuff together"

- What did we just find? The probability for each y is its numerator (which is different for every y) divided by the denominator (which is the same for all the outputs)
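Translated directly into Python, the steps above could look like the sketch below. It uses only the standard `math` module; the function name `softmax` and the plain-list input are my own choices for illustration. The numerators are the exponentials of each y, and the denominator is computed once and reused for every output.

```python
import math

def softmax(ys):
    """Return S(y_i) = e^(y_i) / sum_j e^(y_j) for every y_i in ys."""
    # Numerators: e raised to the power of each model output y_i
    numerators = [math.exp(y) for y in ys]
    # Denominator: the sum of all the exponentials (the same for every output)
    denominator = sum(numerators)
    # Each probability is its own numerator divided by the shared denominator
    return [n / denominator for n in numerators]

print(softmax([1.0, 2.0, 3.0]))
```

Many library implementations first subtract the largest y from every input before exponentiating. That leaves the result unchanged (the same factor cancels out of the numerator and the denominator) but keeps `math.exp` from overflowing when the inputs are very large.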

Why use exponentials?

Because we exponentiate the numbers, the results are always positive, and they get very large very quickly as the input grows.

Why is this nifty? If one input y is higher than the others, the exponential makes it stand out even more. As a result, Softmax gives the largest share of the probability to the class with the highest input, pushing that output closer to 1. The closer an output is to 1, the more likely it is that the image belongs to that class.
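To see what the exponential buys us, here is a small comparison sketch. `plain_normalize` is a hypothetical helper made up purely for contrast: it divides each input by the plain sum, with no exponentials. With inputs 1, 2, 3, Softmax gives the top class a noticeably bigger share, and with a negative input, plain normalization produces a negative "probability" while Softmax stays positive.

```python
import math

def softmax(scores):
    exps = [math.exp(s) for s in scores]  # exponentials are always positive
    total = sum(exps)
    return [e / total for e in exps]

def plain_normalize(scores):
    # Hypothetical contrast: divide by the plain sum, no exponentials
    total = sum(scores)
    return [s / total for s in scores]

scores = [1, 2, 3]
print([round(p, 2) for p in plain_normalize(scores)])  # [0.17, 0.33, 0.5]
print([round(p, 2) for p in softmax(scores)])          # [0.09, 0.24, 0.67]

# With a negative input, plain normalization breaks down
print([round(p, 2) for p in plain_normalize([-1, 1, 2, 3])])  # [-0.2, 0.2, 0.4, 0.6]
```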

What did we just do to the outputs?

- the outputs will always be in the range between 0 and 1

- the outputs will always add up to 1

- each value of the outputs represents a probability

- taken together the outputs form a probability distribution
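These properties are easy to spot-check. The sketch below feeds randomly generated scores (a seeded `random` module, so it's repeatable; the ranges are arbitrary choices of mine) into a small `softmax` helper and asserts that every output lies between 0 and 1 and that the outputs add up to 1, using `math.isclose` to allow for floating-point rounding.

```python
import math
import random

def softmax(scores):
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

random.seed(0)  # fixed seed so the check is repeatable
for _ in range(5):
    # Random model outputs between -5 and 5, for 2 to 6 classes
    scores = [random.uniform(-5, 5) for _ in range(random.randint(2, 6))]
    probs = softmax(scores)
    assert all(0 < p < 1 for p in probs)   # each output is between 0 and 1
    assert math.isclose(sum(probs), 1.0)   # the outputs add up to 1
print("all checks passed")
```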

A brief example of Softmax at work

- We have our inputs to the Softmax function: -1, 1, 2, 3

- We use each of these inputs as an exponent of e:

$e^{-1}, e^{1}, e^{2}, e^{3}$

- which gives us approximately these results: 0.37, 2.72, 7.39, 20.09

- we add together the exponentials to form our denominator (we will use the same denominator for each input):

$e^{-1} + e^{1} + e^{2} + e^{3} \approx 30.56$

- then we divide each numerator by the denominator that we just found:

- 0.37 / 30.56 ≈ 0.01

- 2.72 / 30.56 ≈ 0.09

- 7.39 / 30.56 ≈ 0.24

- 20.09 / 30.56 ≈ 0.66

- So Softmax turns these inputs into these outputs:

- -1 → 0.01

- 1 → 0.09

- 2 → 0.24

- 3 → 0.66

- and if we add up all the outputs, they equal 1 (try it, it actually works!)
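If you'd rather let the computer do the arithmetic, this short sketch repeats the worked example step by step with Python's `math` module. The variable names are just my labels for each step.

```python
import math

inputs = [-1, 1, 2, 3]

# Step 1: raise e to the power of each input
exps = [math.exp(y) for y in inputs]
# Step 2: add the exponentials to get the shared denominator
denominator = sum(exps)
# Step 3: divide each exponential by the denominator
outputs = [e / denominator for e in exps]

print([round(e, 2) for e in exps])  # [0.37, 2.72, 7.39, 20.09]
print(round(denominator, 2))        # 30.56
for y, p in zip(inputs, outputs):
    print(f"{y} -> {p:.2f}")
print(round(sum(outputs), 10))      # 1.0
```

The loop prints the same 0.01, 0.09, 0.24, and 0.66 as the hand calculation, and the outputs sum to 1 up to floating-point rounding.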

Thanks for reading this week's blog post. I hope you enjoyed it and have a clearer understanding of how the mathematics behind Softmax works.